Practical Simple Linux Backup Solution – easy and reliable

Mostly so that I can remember how my backup routines work, and know what I’m looking for when it comes to a restore, here are my instructions for creating a backup solution that gives me the features I need.

The features

I require:

- easily accessible copies of important data in the event that

  - I delete something

  - I change something and the effect doesn’t become apparent until I can’t remember exactly what I did

  - I need to refer back to something I once created but no longer have in the current setup

- less easily accessible, but reliably available, copies of important data in the event that

  - my disk fails

  - the box fails

  - something gets stolen, burnt, flooded or otherwise made unavailable

To do that I have created a routine to automate things.

The routine

- automatically takes a current copy of important data, timestamps it and saves it in another location

- automatically copies that copy to an online facility (using IDrive)

- automatically does some housekeeping – deletes aged files while keeping one archived copy per month

- automatically leaves me with a useful summary of what happened, what didn’t and what I think I’ll need to know but might forget in days and decades to come

For ease of readability for the rest of the article, it might be useful to know what constitutes important data.

The data

- MySQL – structures and data from all the databases on this single MySQL server

- Node-RED – flows, settings, certificates, credentials and static content

- Bash history – commands entered into the CLI since the box was installed

- DNS – BIND9 config files and zone files

- DHCP – isc-dhcp-server config files

- Scripts – the scripts that do the backing up in the first place

An overview of what goes on will also help.

An overview

- On an hourly basis a script runs (via a cron job) to take an incremental backup of the MySQL data, zip it up and put it (timestamped) in a local backups folder

- On a daily basis (overnight) a script runs (via a cron job) to:

  - check whether there are any files from the first of the month and archive them in an Archive folder within the local backups folder

  - take a full backup of the MySQL data, zip it up and put it (timestamped) in the local backups folder

  - take a copy of each of the Node-RED files, bash history, DNS files, DHCP files and my scripts files, timestamp them and put them in the local backups folder

  - check whether the last cloud backup was successful and, if it was, check for aged local files and delete them

    - ie it checks (only known files – not a wildcard search) for aged files (age being dependent on file type) in the local backup folder and deletes them:

      - MySQL full backups from the month that is 6 months prior to the current month (so ALL of August’s files are deleted the first time the script runs in February)

      - MySQL incremental backups from 2 months prior (on 1/2/22 all files from Dec ’21)

      - etc, etc, but note that any files from the first day of each month are already archived and so don’t get deleted

  - write a log containing:

    - the date on which the script ran

    - the fact that the script ran

    - whether the test for a successful cloud backup returned error-free success (note that the online backup can complete successfully, fail to run, or complete with errors)

    - whether or not the script executed the routine to check for and delete aged files (but not whether that deletion was successful)

    - a bit of information about what is going on and where to look for more details in future when I’ve forgotten even that I wrote this stuff

- Also on a daily basis (overnight but separately to the script above) an IDrive scheduled backup runs to:

  - take a copy of the local backups folder and store it online in the IDrive facility (IDrive setup notes are separately available here)
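The "take a copy, timestamp it, put it in the backups folder" step in the overview above can be sketched as a small bash function. This is only an illustration: the name timestamped_copy and its arguments are my own invention and do not appear in the scripts later in this article, which simply repeat the cp command for each file.

```bash
#!/bin/bash
# Hypothetical helper (not part of the original scripts): copy one file into
# a backup folder, adding a yymmdd_HHMMSS timestamp to the new file name.
timestamped_copy() {
    local src=$1        # file to back up
    local dest_dir=$2   # backup folder, e.g. ~/LinuxBox/backups
    local label=$3      # name prefix, e.g. "bash_history"
    local ext=${4:-}    # optional extension, e.g. ".json"
    local stamp
    stamp=$(date +"%y%m%d_%H%M%S")
    # -p keeps the original modification time and permissions on the copy
    cp -p -- "$src" "${dest_dir}/${label}-${stamp}${ext}"
}
```

Called as timestamped_copy ~/.bash_history ~/LinuxBox/backups bash_history, it would produce a file named in the same pattern the daily script uses, with the timestamp at the end of the name.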

The full instructions

Create a folder for your backups on your Linux machine (which is assumed to be called ‘LinuxBox’ but replace that with whatever you like).

mkdir ~/LinuxBox

mkdir ~/LinuxBox/backups

mkdir ~/LinuxBox/backups/Archive

Daily backup

Create a script to run daily to achieve the outcomes listed above. Save it in the user’s home folder (or wherever you like as long as you can run it from the right place later), and set permissions to allow it to be executed.

sudo touch ~/daily_backups.sh

sudo chmod 755 ~/daily_backups.sh

sudo nano ~/daily_backups.sh

In the nano editor create the following content, then exit and save with Ctrl-X, yes, ENTER once complete. Note that the commands below are commented and that <linux_username> and <idrive_username> will need replacing with the relevant info. <linux_username> is your username on the Linux machine – if you’ve set up your Linux box using any of the instructions here then you’ll have selected a username and know it. <idrive_username> is the username you use to log in to your IDrive account – this might make more sense if you’ve also followed the instructions below in The cloud job. If you don’t already have an IDrive account, read or follow that section first.

#!/bin/bash

# copy all first-of-the-month files into Archive (COPY!)

# do this first on the basis that this script ran yesterday, and the day before and since at least

# the first of this month, which will eventually be true

cp -p -u /home/<linux_username>/LinuxBox/backups/*$(date +"%y%m")01_* /home/<linux_username>/LinuxBox/backups/Archive/

# do the backups - copy any required/listed files into the backup folders and timestamp them (COPY!)

cp /home/<linux_username>/.node-red/flows_LinuxBox.json "/home/<linux_username>/LinuxBox/backups/Node-RED-flows_LinuxBox-$(date +"%y%m%d_%H%M%S").json"

cp /home/<linux_username>/.node-red/settings.js "/home/<linux_username>/LinuxBox/backups/Node-RED-settings-$(date +"%y%m%d_%H%M%S").js"

cp /home/<linux_username>/.node-red/flows_LinuxBox_cred.json "/home/<linux_username>/LinuxBox/backups/Node-RED-flows_LinuxBox_cred-$(date +"%y%m%d_%H%M%S").json"

cp -r /home/<linux_username>/.node-red/node-red-static/ /home/<linux_username>/LinuxBox/backups/Node-RED-static/

cp /home/<linux_username>/.bash_history "/home/<linux_username>/LinuxBox/backups/bash_history-$(date +"%y%m%d_%H%M%S")"

chmod 644 /home/<linux_username>/LinuxBox/backups/bash_history*

mysqldump --defaults-extra-file=/home/<linux_username>/mysqlpassword.cnf -u root --flush-logs --delete-master-logs --single-transaction --all-databases | gzip > /home/<linux_username>/LinuxBox/backups/MySQL-full_$(date +"%y%m%d_%H%M%S").gz

cp -r /etc/bind /home/<linux_username>/LinuxBox/backups/etc-bind/

cp /var/cache/bind/my-smart.haus.zone "/home/<linux_username>/LinuxBox/backups/var-cache-bind-my-smart.haus.zone-$(date +"%y%m%d_%H%M%S")"

cp /etc/dhcp/dhcpd.conf "/home/<linux_username>/LinuxBox/backups/etc-dhcp-dhcpd.conf-$(date +"%y%m%d_%H%M%S")"

cp /home/<linux_username>/daily_backups.sh "/home/<linux_username>/LinuxBox/backups/daily_backups-$(date +"%y%m%d_%H%M%S").sh"

cp /home/<linux_username>/hourly_backups.sh "/home/<linux_username>/LinuxBox/backups/hourly_backups-$(date +"%y%m%d_%H%M%S").sh"

cp /home/<linux_username>/IDrive_backup_status.sh "/home/<linux_username>/LinuxBox/backups/IDrive_backup_status-$(date +"%y%m%d_%H%M%S").sh"

# see LinuxBox /home/<linux_username>/IDrive_backup_status.sh for comments on the following...

# if latest IDrive backup contained no errors then delete local backups (from /backups but note first-of-the-month files are still in /backups/Archive)

# that are from the month 2 months earlier in the case of incremental MySQL backups (ie on 1st Feb that means anything from December (1st to 31st)), and

# that are from the month 6 months earlier in the case of full MySQL backups, and

# that are from the month 2 months earlier in the case of:

# Node-RED files other than static folder (do not remove *.js* from the match below),

# bash_history, dhcpd.conf, zone files

# (as long as they're all still being backed up with the filename structured as at time of writing)

if [[ $(ls -Art ~/IDriveForLinux/idriveIt/user_profile/<linux_username>/<idrive_username>/Backup/DefaultBackupSet/LOGS | tail -n 1 | grep _Success_ | wc -l) -eq 1 ]]

then

#echo Last IDrive backup was a SUCCESS with zero errors

rm -rf /home/<linux_username>/LinuxBox/backups/MySQL-full_$(date --date='-6 month' +"%y%m")*

rm -rf /home/<linux_username>/LinuxBox/backups/MySQL-inc_$(date --date='-2 month' +"%y%m")*

rm -rf /home/<linux_username>/LinuxBox/backups/Node-RED-*$(date --date='-2 month' +"%y%m")*.js*

rm -rf /home/<linux_username>/LinuxBox/backups/bash_history-$(date --date='-2 month' +"%y%m")*

rm -rf /home/<linux_username>/LinuxBox/backups/etc-dhcp-dhcpd.conf-$(date --date='-2 month' +"%y%m")*

rm -rf /home/<linux_username>/LinuxBox/backups/var-cache-bind-my-smart.haus.zone-$(date --date='-2 month' +"%y%m")*

echo "[$(date +"%y%m%d_%H%M%S")]" >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo CBL backup script RAN >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo IDrive backup SUCCESS >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo Local files deletion routine RAN. This does not necessarily mean it succeeded. >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo ie the test for last backup SUCCESS returned TRUE - see commented bash script at >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo /home/<linux_username>/daily_backups.sh and more detailed at /home/<linux_username>/IDrive_backup_status.sh >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

else

#echo Last IDrive backup either failed, was a success with errors or was a MANUAL backup - ie wasn't the scheduled backup we're interested in

echo "[$(date +"%y%m%d_%H%M%S")]" >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo CBL backup script RAN >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo IDrive backup HAD ERRORS >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo Local files deletion routine DID NOT RUN because IDrive backup had errors >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo ie the test for last backup success returned FALSE - see commented bash script at >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

echo /home/<linux_username>/daily_backups.sh and more detailed at /home/<linux_username>/IDrive_backup_status.sh >> /home/<linux_username>/LinuxBox/backups/backup_log_CBL_script.txt

#do nothing more

fi

A cron job will be required to run this daily backup automatically. However, save doing that until the end. If it runs before all parts are set up there will be errors. See the last steps section below.
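The daily script above writes its log with a run of near-identical echo lines. As a possible tidy-up, and only as a sketch, the same thing could be done with one small function. The name log_entry is hypothetical and is not used anywhere in the script as written.

```bash
#!/bin/bash
# Hypothetical helper: append a [yymmdd_HHMMSS] timestamp line, followed by
# one line per message argument, to the given log file.
log_entry() {
    local log_file=$1
    shift
    {
        printf '[%s]\n' "$(date +"%y%m%d_%H%M%S")"
        printf '%s\n' "$@"
    } >> "$log_file"
}
```

For example, log_entry ~/LinuxBox/backups/backup_log_CBL_script.txt "CBL backup script RAN" "IDrive backup SUCCESS" would append three lines to the log. Quoting each message also avoids the shell treating characters such as square brackets as filename patterns.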

Hourly backup

Create a script to run hourly to make an incremental backup of the MySQL data since the last (daily) full backup, similarly to the daily routine above.

sudo touch ~/hourly_backups.sh

sudo chmod 755 ~/hourly_backups.sh

sudo nano ~/hourly_backups.sh

In the nano editor create the following content, then exit and save with Ctrl-X, yes, ENTER once complete. Again, ensure <this_stuff> is applicable to your installation. Thanks to sqlbak for this routine.

#!/bin/bash

#path to directory with binary log files

binlogs_path=/var/log/mysql/

#path to backup storage directory

backup_folder=/home/<linux_username>/LinuxBox/backups

#start writing to new binary log file

mysql --defaults-extra-file=/home/<linux_username>/mysqlpassword.cnf -u root -E --execute='FLUSH BINARY LOGS;' mysql

#get list of binary log files

binlogs=$(mysql --defaults-extra-file=/home/<linux_username>/mysqlpassword.cnf -u root -E --execute='SHOW BINARY LOGS;' mysql | grep Log_name | sed -e 's/Log_name://g' -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//')

#get list of binary log for backup (all but the last one)

binlogs_without_Last=`echo "${binlogs}" | head -n -1`

#get the last active binary log file (which you do not have to copy)

binlog_Last=`echo "${binlogs}" | tail -n -1`

#form full path to binary log files

binlogs_fullPath=`echo "${binlogs_without_Last}" | xargs -I % echo $binlogs_path%`

#compress binary logs into archive

zip $backup_folder/MySQL-inc_$(date +"%y%m%d_%H%M%S").zip $binlogs_fullPath

#delete saved binary log files

echo $binlog_Last | xargs -I % mysql --defaults-extra-file=/home/<linux_username>/mysqlpassword.cnf -u root -E --execute='PURGE BINARY LOGS TO "%";' mysql

You may notice that the above commands refer to a password file (mysqlpassword.cnf). This is to ensure the scripts themselves do not contain the password in plain text. For the above to work, the password file must be created and secured so as to be accessible only with appropriate permissions…

sudo touch ~/mysqlpassword.cnf

sudo chmod 600 ~/mysqlpassword.cnf

sudo nano ~/mysqlpassword.cnf

In the nano editor create the following content, then exit and save with Ctrl-X, yes, ENTER once complete. Replace <your_password> with the root password for access to your MySQL databases

[mysqldump]

# the following password will be sent to mysqldump

password="<your_password>"

[mysql]

# the following password will be sent to mysql

password="<your_password>"

Again a cron job will be required to run this hourly backup automatically. See the last steps section below so as to avoid having it run before all steps are complete.
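The key idea in the hourly script is the split between the closed binary logs (which get zipped and then purged) and the last, still-active one (which is left alone). A minimal sketch of just that split follows. The function names and the sample log names are hypothetical stand-ins for the real output of SHOW BINARY LOGS, and the negative line count for head assumes GNU coreutils.

```bash
#!/bin/bash
# Sketch only: split a newline-separated list of binary log names into the
# closed logs (all but the last) and the active log (the last one).
closed_binlogs() { printf '%s\n' "$1" | head -n -1; }
active_binlog()  { printf '%s\n' "$1" | tail -n 1; }

# Hypothetical sample list standing in for SHOW BINARY LOGS output
sample=$'binlog.000007\nbinlog.000008\nbinlog.000009'
closed_binlogs "$sample"   # prints binlog.000007 and binlog.000008
active_binlog "$sample"    # prints binlog.000009
```

If only one log exists, closed_binlogs prints nothing, which is the situation where the hourly script would have nothing to zip.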

The cloud job

In order to have the local backup files safely backed up to the cloud an IDrive account and setup is needed. Other services can be used but the above is intended for IDrive and would need amending to use with other online/cloud backup solutions.

Follow the separate instructions here to setup IDrive on your Linux machine and note the following when doing so:

- IDrive username

Once complete add the /home/<linux_username>/LinuxBox/backups folder to the DefaultBackupSet in your IDrive configuration as per the instructions here.

Last steps

To enable the MySQL backups to operate as above, a few steps are required just once.

Enable binary logs in MySQL by uncommenting ‘server-id’ and ‘log-bin’ in the conf file. Save and exit with Ctrl-X, yes, ENTER and restart MySQL

sudo nano /etc/mysql/mysql.conf.d/mysqld.cnf

sudo systemctl restart mysql

Next perform a single ‘initialisation’ backup which takes a full backup and sets up the binary logs to a state from which full and incremental backups can be taken

mysqldump --defaults-extra-file=/home/<linux_username>/mysqlpassword.cnf -u root --flush-logs --delete-master-logs --single-transaction --all-databases | gzip > /home/<linux_username>/LinuxBox/backups/MySQL-inc_$(date +"%y%m%d_%H%M%S").gz

Also, as indicated above, create a cron job to run the daily backup automatically as the root user. This step was delayed so as to avoid automating tasks before everything was ready. The example below runs at 3.33am daily, but choose whatever time you like: it’s good practice to avoid round numbers, which reduces the chances of this running at the same time as other intensive jobs without having to keep track of all the tasks the machine does at set times. To do so, edit the system-wide crontab

sudo nano /etc/crontab

and add the following content below anything that’s already there, before saving and exiting with Ctrl-X, yes, ENTER

# m h dom mon dow user command

33 3 * * * root /home/<linux_username>/daily_backups.sh

Finally, add the hourly job to the crontab with the following line (which, in this case, runs at 17 minutes to every hour)

43 * * * * root /home/<linux_username>/hourly_backups.sh

Once everything is done a few checks will give an indication of what’s going on.

After the first hourly backup check that an incremental file has been created by listing the contents of the backup folder

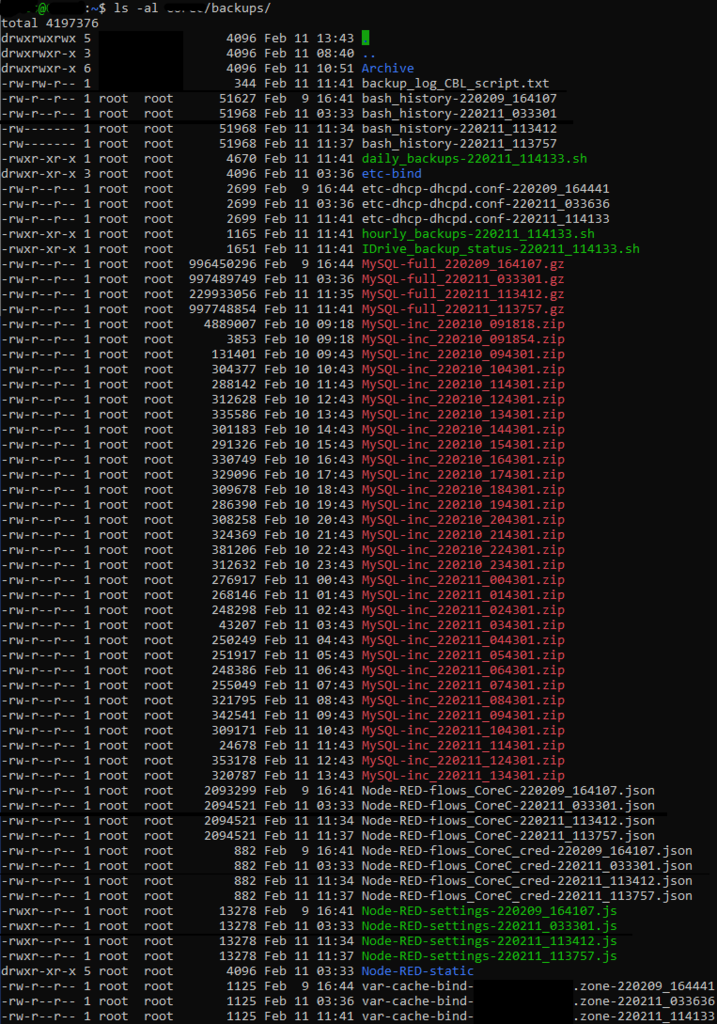

ls -al ~/LinuxBox/backupsto see that a file something like the following has been created

After the first daily backups there should be many more files

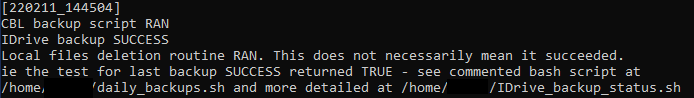

and the summary should have been written

If a daily IDrive scheduled backup has completed successfully, that should be evident in the Web interface at https://www.idrive.com/idrive/home/

On the first day of a month the local backups folder should contain a file with “01_” in its name for each of the backed-up file types. On the second day (or at least the first time the daily script runs after a successful run on the first of a month) those files should be copied to the Archive folder.
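To make the archive behaviour easier to try out on a scratch folder, here is a sketch of the same first-of-the-month copy wrapped in a function. The name archive_first_of_month, its optional reference-date argument and the mkdir -p are my additions for illustration; the daily script does the copy with a single cp line and assumes the Archive folder already exists. It relies on GNU date and GNU cp.

```bash
#!/bin/bash
# Hypothetical helper: copy every file stamped with the first day of the
# reference date's month into the Archive subfolder (copy, not move).
archive_first_of_month() {
    local backups_dir=$1
    local ref=${2:-today}   # any date string GNU date understands
    local ym f
    ym=$(date --date="$ref" +"%y%m")
    mkdir -p -- "$backups_dir/Archive"
    for f in "$backups_dir"/*"${ym}01_"*; do
        [ -f "$f" ] || continue   # skips the unexpanded pattern when nothing matches
        # -u only copies when the archived copy is missing or older
        cp -p -u -- "$f" "$backups_dir/Archive/"
    done
}
```

Running archive_first_of_month /tmp/test-backups 2022-02-02 against a folder of dummy files should leave only the files stamped 220201_ in /tmp/test-backups/Archive.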

On the first day of the third month (ie the first day of the month after a complete month following a month with at least one successful daily script completing) some files should be deleted from the local backups folder. For example, if a daily backup ran successfully on 15th December then on 1st February all files from December should be deleted (with the exception of files in the Archive folder) when that day’s daily script executes.
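The month prefix used for deletion comes from GNU date’s relative dates. One thing worth knowing, as far as I understand GNU date, is that subtracting months from a day late in the month can roll over into the following month when the target month is shorter (31st March minus one month lands in early March, not February). The sketch below sidesteps that by working from the 15th of the month. The function name month_prefix and its arguments are hypothetical and not part of the daily script.

```bash
#!/bin/bash
# Hypothetical helper: print the yymm prefix for the month that is
# N months before the reference date (GNU date assumed).
month_prefix() {
    local months_back=$1
    local ref=${2:-today}
    local anchor
    # Anchor on the 15th so month arithmetic never overflows a short month
    anchor=$(date --date="$ref" +"%Y-%m-15")
    date --date="$anchor -$months_back month" +"%y%m"
}

month_prefix 2 2022-02-01   # prints 2112 (December '21)
month_prefix 6 2022-02-01   # prints 2108 (August '21)
```

These two results match the examples given earlier: incremental backups from December and full backups from August are the ones due for deletion on 1st February.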

Finally

Note the above relies on my assumptions being correct…

- that ~/IDriveForLinux/idriveIt/user_profile/<linux_username>/<idrive_username>/Backup/DefaultBackupSet/LOGS contains a file with a name containing _Success_ when a backup had no errors, and _Success*_ or _SOMETHINGELSE_ otherwise

This is true at the time of writing but relies on IDrive development to remain the case.
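Because everything hinges on that naming assumption, it may help to be able to test the check on its own against a scratch folder. The sketch below wraps the same "newest file name contains _Success_" test in a function. The name last_backup_succeeded is hypothetical, and any file names you try it with are made up for testing rather than taken from real IDrive logs.

```bash
#!/bin/bash
# Hypothetical helper: succeed only if the most recently modified entry in
# the given LOGS folder has _Success_ in its name.
last_backup_succeeded() {
    local logs_dir=$1
    local newest
    # -A: include dotfiles, -r with -t: oldest first, so the last line is the newest
    newest=$(ls -Art -- "$logs_dir" | tail -n 1)
    [[ $newest == *_Success_* ]]
}
```

It can be used as if last_backup_succeeded "$logs_dir"; then ... fi. A name containing _Success*_ (with a literal asterisk) does not match, and neither does an empty folder, so in both cases the deletion routine would be skipped.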